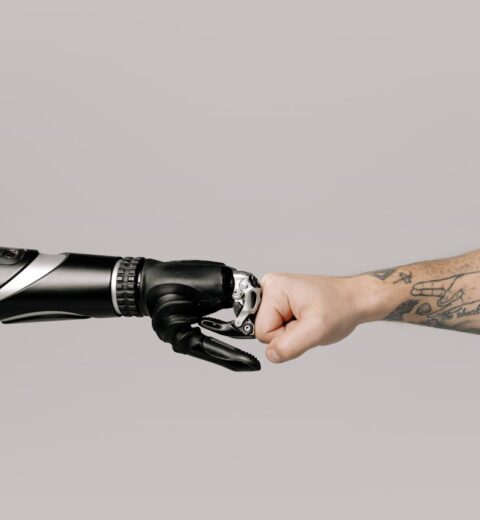

Automation has transformed the hiring process, from resume screening to interview scheduling. But as tools like AI and machine learning continue to influence who gets hired, ethical questions loom large. Are candidates being treated fairly? Is data being handled responsibly? Can a machine make an unbiased decision?

If you’re using automation in your hiring process, or planning to, you need to understand the ethics of automated hiring. This guide breaks it down into real-world practices you can apply right now.

Key Takeaways

- Bias in AI can reinforce inequality if left unchecked.

- Transparency in how hiring tools work is crucial for trust.

- Candidates should be informed when automation is used.

- Human oversight is key to ethical hiring decisions.

- Fairness, explainability, and data privacy should guide all AI use in recruitment.

Why Ethics Matter in Automated Hiring

Automated hiring promises speed, efficiency, and scale. But when left unchecked, it can introduce or magnify bias—especially against underrepresented groups.

AI tools learn from historical data, which often reflects existing inequalities. If your data has bias, your hiring outcomes will too. Ethics in hiring automation isn’t about saying no to tech—it’s about using it responsibly.

A Gartner study predicts 80% of HR leaders will use AI in decision-making by 2025. That makes ethics not a “nice-to-have,” but a business-critical priority.

Common Ethical Risks in Automated Hiring

- Bias Amplification: AI may favor candidates who match historical hires—excluding minorities or non-traditional applicants.

- Opaque Decision-Making: Many tools function like black boxes, making it hard to explain why someone was rejected.

- Lack of Informed Consent: Candidates often don’t know their applications are screened by machines.

- Data Privacy Issues: Sensitive data may be stored or analyzed without proper safeguards.

Being aware of these risks is the first step to solving them.

Principles for Ethical AI Use in Hiring

1. Fairness and Bias Mitigation

Train AI systems on diverse, representative datasets. Regularly audit for disparate impact. Use fairness testing tools like IBM AI Fairness 360 or Google’s What-If Tool to detect bias.
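A common starting point for a disparate-impact audit is the "four-fifths rule" from the US Uniform Guidelines on Employee Selection Procedures: if a group's selection rate is less than 80% of the rate for the most-selected group, that is treated as evidence of possible adverse impact. The minimal Python sketch below applies the check to screening outcomes. The function names and sample data are hypothetical, and a flag is a prompt to investigate rather than a legal finding; dedicated toolkits such as AI Fairness 360 offer many more metrics.

```python
from collections import defaultdict

def selection_rates(outcomes):
    """Share of candidates the automated screen advanced, per group.

    `outcomes` is an iterable of (group, advanced) pairs, where `advanced`
    is True if the candidate passed the screen.
    """
    totals = defaultdict(int)
    advanced = defaultdict(int)
    for group, passed in outcomes:
        totals[group] += 1
        if passed:
            advanced[group] += 1
    return {group: advanced[group] / totals[group] for group in totals}

def impact_ratios(rates):
    """Each group's selection rate divided by the highest group's rate."""
    best = max(rates.values())
    # If nobody was selected, no group is disadvantaged relative to another.
    return {group: (rate / best if best > 0 else 1.0) for group, rate in rates.items()}

def flag_adverse_impact(outcomes, threshold=0.8):
    """Groups whose impact ratio falls below the four-fifths threshold."""
    ratios = impact_ratios(selection_rates(outcomes))
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)

# Hypothetical results: 50 of 100 group-A and 30 of 100 group-B candidates advanced.
sample = [("A", i < 50) for i in range(100)] + [("B", i < 30) for i in range(100)]
print(selection_rates(sample))      # {'A': 0.5, 'B': 0.3}
print(flag_adverse_impact(sample))  # ['B'], since 0.3 / 0.5 = 0.6 < 0.8
```

Running the same check on every model update, and on each hiring stage separately, makes it easier to see where a gap first appears.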

Avoid using proxies like zip codes or university names, which can encode systemic bias. Review AI decisions with human input to ensure equitable outcomes.
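Dropping known proxy fields is simple; spotting a proxy is harder. The sketch below removes a hypothetical set of proxy fields from a candidate record and estimates how strongly a feature predicts a protected attribute in historical data (for each feature value, the count of its most common group, summed and divided by the number of records). The field names, sample records, and this particular measure are illustrative assumptions. Compare the result with the baseline share, and keep in mind that removing obvious proxies does not stop other features from encoding the same information in combination.

```python
from collections import Counter, defaultdict

# Hypothetical field names; match them to your own applicant-tracking schema.
PROXY_FIELDS = {"zip_code", "university_name"}

def strip_proxies(candidate, proxy_fields=PROXY_FIELDS):
    """Return a copy of the candidate record without known proxy fields."""
    return {key: value for key, value in candidate.items() if key not in proxy_fields}

def proxy_strength(records, feature, protected):
    """Frequency-weighted majority share of `protected` within each value of `feature`.

    Values closer to 1.0 mean the feature comes closer to identifying the group.
    `records` must be a list of dicts.
    """
    by_value = defaultdict(Counter)
    for record in records:
        by_value[record[feature]][record[protected]] += 1
    return sum(max(c.values()) for c in by_value.values()) / len(records)

def baseline_share(records, protected):
    """Majority share with no feature at all, for comparison."""
    counts = Counter(record[protected] for record in records)
    return max(counts.values()) / len(records)

# Hypothetical historical data in which zip code lines up exactly with group.
history = [
    {"zip_code": "10001", "group": "X"},
    {"zip_code": "10001", "group": "X"},
    {"zip_code": "20002", "group": "Y"},
    {"zip_code": "20002", "group": "Y"},
]
print(proxy_strength(history, "zip_code", "group"))  # 1.0
print(baseline_share(history, "group"))              # 0.5
print(strip_proxies({"zip_code": "10001", "skills": ["sql"]}))  # {'skills': ['sql']}
```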

2. Transparency and Explainability

Candidates have the right to know how they’re being evaluated. Choose AI vendors that offer explainability—so you can say, “This decision was based on X, Y, and Z.”

If you can’t explain the decision, you shouldn’t be using the tool. Transparent hiring builds trust and protects your brand.
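One way to make "This decision was based on X, Y, and Z" literally true is a scoring model whose output breaks down into per-feature contributions. The sketch below uses a simple linear score with hypothetical features and hand-set weights. It is not how any vendor named in this guide works, and more complex models need dedicated explanation methods instead.

```python
# Hypothetical features and hand-set weights, for illustration only.
WEIGHTS = {
    "years_relevant_experience": 0.5,
    "required_skills_matched": 1.0,
    "assessment_score": 0.02,
}

def score_with_explanation(candidate, weights=WEIGHTS, top_n=3):
    """Linear score plus the features that contributed most to it."""
    contributions = {
        feature: weight * candidate.get(feature, 0)
        for feature, weight in weights.items()
    }
    total = sum(contributions.values())
    # Largest absolute contributions first, so the main drivers lead the explanation.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    reasons = [f"{feature}: {value:+.2f}" for feature, value in ranked[:top_n]]
    return total, reasons

score, reasons = score_with_explanation(
    {"years_relevant_experience": 4, "required_skills_matched": 3, "assessment_score": 80}
)
print(round(score, 2))  # 6.6
print(reasons)
```

Because every point of the score traces back to a named input, the same `reasons` list can be shown to a recruiter reviewing the decision or summarized for the candidate.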

3. Informed Candidate Consent

Always disclose when automation is part of the process. Let candidates know what data is being collected, how it’s being used, and who has access to it.

Make this clear in job postings, application forms, and privacy policies. Give candidates the option to opt out or request human review when possible.
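Disclosure and opt-out choices are easier to honor when they are stored with the application and checked before any automated step runs. The sketch below assumes a hypothetical `ConsentRecord` structure and a `route_application` helper that sends candidates who opted out, or who asked for a person, to human review.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass
class ConsentRecord:
    """Hypothetical record of what a candidate was told and what they chose."""
    candidate_id: str
    notice_version: str          # which disclosure text the candidate saw
    data_collected: list[str]    # e.g. ["resume", "assessment answers"]
    opted_out_of_automation: bool = False
    requested_human_review: bool = False
    recorded_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

def route_application(consent: ConsentRecord) -> str:
    """Send the application to the automated screen only if the candidate allowed it."""
    if consent.opted_out_of_automation or consent.requested_human_review:
        return "human_review"
    return "automated_screen"

opted_out = ConsentRecord("cand-001", "2025-01", ["resume"], opted_out_of_automation=True)
default = ConsentRecord("cand-002", "2025-01", ["resume", "assessment answers"])
print(route_application(opted_out))  # human_review
print(route_application(default))    # automated_screen
```

Storing `notice_version` alongside the choice lets you show later exactly which disclosure a candidate agreed to.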

4. Data Privacy and Security

Follow GDPR, CCPA, and other local privacy laws. Anonymize candidate data when possible and encrypt sensitive information.

Work only with vendors who follow strict data governance standards and provide audit logs. Poor data security not only breaks trust—it risks legal consequences.
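A common way to limit exposure is pseudonymization: drop direct identifiers and replace the candidate ID with a keyed hash, so records can still be linked without revealing whose they are. The sketch below uses Python's standard `hmac` module with SHA-256; the field names are hypothetical, and in practice the key would come from a secrets manager. Under GDPR, pseudonymized data still counts as personal data, and encryption at rest is better handled by your storage platform or a vetted cryptography library than by hand-written code.

```python
import hashlib
import hmac
import os

# Direct identifiers to drop before records reach analytics or model training.
# These field names are hypothetical; match them to your own schema.
DIRECT_IDENTIFIERS = {"name", "email", "phone", "address"}

def pseudonymize(candidate: dict, key: bytes) -> dict:
    """Replace the candidate ID with a keyed hash and drop direct identifiers.

    HMAC-SHA256 maps the same candidate to the same token under the same key,
    so records can still be joined, but the token cannot be recomputed
    without the secret key.
    """
    token = hmac.new(key, candidate["candidate_id"].encode("utf-8"), hashlib.sha256).hexdigest()
    cleaned = {
        field: value
        for field, value in candidate.items()
        if field not in DIRECT_IDENTIFIERS and field != "candidate_id"
    }
    cleaned["candidate_token"] = token
    return cleaned

# Stand-in key for the example; load a real key from a secrets manager.
key = os.urandom(32)
record = {"candidate_id": "cand-001", "name": "Jane Doe",
          "email": "jane@example.com", "skills": ["sql", "python"]}
print(pseudonymize(record, key))
```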

5. Human Oversight and Accountability

AI should support, not replace, human judgment. Ensure that a qualified human is involved at key decision points, especially rejections and final offers.

Assign clear responsibility for AI oversight within your HR or talent acquisition (TA) team. Make ethical AI part of your regular hiring reviews.
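Human oversight holds up best when the system enforces it rather than relying on habit. The sketch below, with hypothetical class and field names, refuses to finalize a rejection or an offer unless a named reviewer and a written rationale are attached, which also leaves an accountability trail for later reviews.

```python
from dataclasses import dataclass
from typing import Optional

# Actions the model may recommend but never finalize on its own.
HUMAN_REQUIRED = {"reject", "offer"}

class ReviewRequired(Exception):
    """Raised when a high-stakes decision lacks an accountable human reviewer."""

@dataclass
class Decision:
    candidate_id: str
    action: str                      # e.g. "advance", "reject", "offer"
    model_recommendation: str
    reviewer: Optional[str] = None   # ID of the accountable person
    rationale: Optional[str] = None  # reviewer's reason, kept for audits

def needs_human_review(decision: Decision) -> bool:
    """True if this action requires sign-off that has not been given yet."""
    return decision.action in HUMAN_REQUIRED and not (decision.reviewer and decision.rationale)

def finalize(decision: Decision) -> Decision:
    """Finalize a decision, refusing high-stakes actions without human sign-off."""
    if needs_human_review(decision):
        raise ReviewRequired(
            f"{decision.action!r} for {decision.candidate_id} needs a named reviewer and rationale"
        )
    return decision

unsigned = Decision("cand-001", "reject", model_recommendation="reject")
signed = Decision("cand-001", "reject", "reject", reviewer="ta-lead-07",
                  rationale="Lacks the required nursing license")
print(needs_human_review(unsigned))  # True
print(finalize(signed).reviewer)     # ta-lead-07
```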

Tools for Ethical Automated Hiring

| Tool | Purpose | Ethical Feature |
|---|---|---|
| HireVue | Video interview AI | Optional human review, explainability options |
| Pymetrics | Cognitive & emotional assessment | Bias mitigation and auditing reports |
| Greenhouse | ATS with automation | Customizable workflows, DEI analytics |
| Modern Hire | Pre-hire assessments | Transparent scoring and consent features |
| Eightfold AI | Candidate matching | Bias testing, explainable insights |

Choose vendors that align with your ethics policy and provide documentation on their AI models.

Building an Ethical AI Hiring Framework

Create internal policies that address the following:

- Use Cases: When and how automation is used

- Fairness Audits: Frequency and scope of bias testing

- Candidate Communication: Standard language for transparency

- Human Review Protocols: When AI decisions require override or review

- Incident Response: What to do if an audit or monitoring flags biased or otherwise unethical outcomes
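Writing the policy down as structured data makes gaps easy to spot and lets you check it automatically. The sketch below is a hypothetical policy-as-code record covering the five areas above; the field names, the 90-day audit interval, and the contact address are placeholder assumptions, not recommendations.

```python
from dataclasses import dataclass

@dataclass
class AutomatedHiringPolicy:
    """Hypothetical policy-as-code record covering the five policy areas."""
    use_cases: list[str]                 # where automation is approved
    audit_interval_days: int             # how often fairness audits run
    audit_scope: list[str]               # tools and stages each audit covers
    candidate_notice: str                # standard disclosure wording
    human_review_required_for: set[str]  # actions a model may not finalize alone
    incident_owner: str                  # who responds when something is flagged

    def gaps(self) -> list[str]:
        """List missing or weak policy areas; an empty list means none were found."""
        issues = []
        if not self.use_cases:
            issues.append("no approved use cases")
        if self.audit_interval_days <= 0 or not self.audit_scope:
            issues.append("fairness audit frequency or scope undefined")
        if not self.candidate_notice.strip():
            issues.append("no standard candidate notice")
        if not {"reject", "offer"} <= self.human_review_required_for:
            issues.append("rejections and offers must require human review")
        if not self.incident_owner:
            issues.append("no incident owner assigned")
        return issues

policy = AutomatedHiringPolicy(
    use_cases=["resume screening", "interview scheduling"],
    audit_interval_days=90,  # placeholder, not a recommendation
    audit_scope=["resume screener", "assessment scoring"],
    candidate_notice="We use automated tools to help review applications. "
                     "You can ask for a person to review yours instead.",
    human_review_required_for={"reject", "offer"},
    incident_owner="ta-ethics-owner@example.com",
)
print(policy.gaps())  # []
```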

Include ethics as a measurable part of your recruitment KPIs. For example, track and report on fairness metrics, candidate opt-out rates, and bias remediation efforts.
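Some of these metrics can be computed directly from an application log. The sketch below, with a hypothetical log format, reports the candidate opt-out rate and how often human reviewers overrode the model's recommendation. An override rate near zero could suggest reviewers are rubber-stamping the tool, while a very high rate could suggest the tool is a poor fit; either is worth a closer look alongside selection-rate fairness metrics.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class ApplicationLog:
    """One row per application; the field names are hypothetical."""
    opted_out: bool                      # candidate declined automated screening
    model_recommendation: Optional[str]  # None when the model was not used
    final_decision: str                  # outcome after human review

def ethics_kpis(logs: list[ApplicationLog]) -> dict[str, float]:
    """Opt-out rate across all applications and override rate among screened ones."""
    if not logs:
        return {"opt_out_rate": 0.0, "override_rate": 0.0}
    screened = [log for log in logs if not log.opted_out]
    overrides = sum(1 for log in screened if log.final_decision != log.model_recommendation)
    return {
        "opt_out_rate": (len(logs) - len(screened)) / len(logs),
        "override_rate": overrides / len(screened) if screened else 0.0,
    }

logs = [
    ApplicationLog(opted_out=True, model_recommendation=None, final_decision="advance"),
    ApplicationLog(opted_out=False, model_recommendation="reject", final_decision="reject"),
    ApplicationLog(opted_out=False, model_recommendation="reject", final_decision="advance"),
    ApplicationLog(opted_out=False, model_recommendation="advance", final_decision="advance"),
]
print(ethics_kpis(logs))
```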

Conclusion

Automation in hiring is here to stay—but how we use it defines its impact. Ethical automated hiring is about combining innovation with accountability. It means using tech to support better decisions, not faster shortcuts.

By embedding fairness, transparency, and oversight into your hiring process, you’ll be better positioned to attract top talent and avoid costly ethical pitfalls. In a world where trust matters more than ever, ethical hiring isn’t just smart. It’s essential.

FAQ

Why is AI in hiring considered risky?

Because AI can unintentionally replicate bias in training data, leading to unfair decisions. It also lacks human context unless carefully managed.

How can I make automated hiring ethical?

Ensure diverse training data, use explainable AI, provide candidate transparency, and maintain human oversight throughout the process.

What laws apply to AI hiring in 2025?

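Data protection laws such as GDPR and CCPA apply, along with emerging AI-specific regulations such as the EU AI Act, which classifies AI systems used in recruitment and hiring decisions as high-risk. Local laws may also require bias audits or candidate disclosures; New York City's Local Law 144, for example, requires independent bias audits and candidate notices for automated employment decision tools.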

Do candidates know when AI is used in hiring?

Often, they don’t. That’s why informed consent and clear disclosures are essential to ethical practice.

Can automation improve diversity in hiring?

Yes—if it’s built and monitored with fairness in mind. When used responsibly, AI can reduce bias and highlight overlooked candidates.


Source Links

Gartner AI in HR Report – https://www.gartner.com/en/newsroom/press-releases/2024-ai-in-hr-trends

IBM AI Fairness 360 – https://aif360.mybluemix.net/

LinkedIn: How to Hire Ethically With AI – https://www.linkedin.com/pulse/how-hire-ethically-ai-sarah-marshall/

AI and Hiring Bias – https://hbr.org/2023/11/how-to-reduce-bias-in-ai-hiring-tools

EU AI Act Summary – https://artificialintelligenceact.eu/

SHRM: Ethical Use of AI in Hiring – https://www.shrm.org/resourcesandtools/hr-topics/technology/pages/ai-ethics-hiring.aspx